
2021.6.18

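After studying CNNs in the Smart Factory AI course at Gyeongnam Technopark, each of us practiced by applying the example code to a dataset of our own choosing.

My small project picks three Marvel characters and classifies them.

1. Downloaded the Marvel character images from Kaggle (https://www.kaggle.com/hchen13/marvel-heroes).

2. Much of the data was poorly organized, so I filtered out the unsuitable images.

3. Sorted the images into folders.

4. Wrote the code.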

Marvel Heroes (Using CNN)

In [ ]:

import os

import zipfile

import urllib.request

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import tensorflow as tf

from tensorflow import keras

from keras.models import Sequential

from keras.layers import Flatten, Dense, Conv2D, MaxPooling2D, Dropout

from keras.preprocessing.image import ImageDataGenerator

from keras import optimizers

(1) Load the data

Mount Google Drive.

In [ ]:

from google.colab import drive

drive.mount('/content/drive')

Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).

In [ ]:

pwd

Out[ ]:

'/content/drive'

Move to the directory that contains the data.

The marvel directory holds the training images: roughly 200 to 300 each for black_widow, spider-man, and captain_america, 745 in total.

In [ ]:

black_widow_dir = os.path.join('/marvel/train/black_widow')

spider_man_dir = os.path.join('/marvel/train/spider-man')

captain_america_dir = os.path.join('/marvel/train/captain_america')

print('total training black widow images:', len(os.listdir(black_widow_dir)))

print('total training spiderman images:', len(os.listdir(spider_man_dir)))

print('total training captain america images:', len(os.listdir(captain_america_dir)))

total training black widow images: 206

total training spiderman images: 300

total training captain america images: 239

In [ ]:

# Use the directory variables defined in the previous cell

black_widow_files = os.listdir(black_widow_dir)

print(black_widow_files[:5])

spider_man_files = os.listdir(spider_man_dir)

print(spider_man_files[:5])

captain_america_files = os.listdir(captain_america_dir)

print(captain_america_files[:5])

['pic_034.jpg', 'pic_028.jpg', 'pic_027.jpg', 'pic_025.jpg', 'pic_048.jpg']

['pic_070.jpg', 'pic_069.jpg', 'pic_066.jpg', 'pic_065.jpg', 'pic_064.jpg']

['pic_005.jpg', 'pic_004.jpg', 'pic_003.jpg', 'pic_002.jpg', 'pic_017.jpg']

The directory holds 109 test images in total across black widow, spiderman, and captain america.

In [ ]:

black_widow_test_dir = os.path.join('/marvel/valid/black_widow')

spider_man_test_dir = os.path.join('/marvel/valid/spider-man')

captain_america_test_dir = os.path.join('/marvel/valid/captain_america')

print('total testing black widow images:', len(os.listdir(black_widow_test_dir)))

print('total testing spiderman images:', len(os.listdir(spider_man_test_dir)))

print('total testing captain america images:', len(os.listdir(captain_america_test_dir)))

total testing black widow images: 24

total testing spiderman images: 52

total testing captain america images: 33
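The per-class counts above can also be collected in one pass. The following is a minimal sketch, assuming the layout used in this post (one subfolder per class under the train and valid directories). The helper name `count_images` is made up for illustration and is not part of the original notebook.

```python
import os


def count_images(split_dir):
    """Return {class_name: number_of_files} for each class subfolder of split_dir."""
    counts = {}
    for class_name in sorted(os.listdir(split_dir)):
        class_dir = os.path.join(split_dir, class_name)
        # Skip stray files that sit next to the class folders
        if os.path.isdir(class_dir):
            counts[class_name] = len(os.listdir(class_dir))
    return counts


# Hypothetical usage with the paths from this post:
# print(count_images('/marvel/train'))
# print(count_images('/marvel/valid'))
```

Comparing the two dictionaries makes it easy to spot a class that is missing or badly under-represented in one of the splits.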

In [ ]:

rps = 3

pic_index = 2

next_black_widow = [os.path.join(black_widow_dir, fname) for fname in black_widow_files[:pic_index]]

next_spider_man = [os.path.join(spider_man_dir, fname) for fname in spider_man_files[:pic_index]]

next_captain_america = [os.path.join(captain_america_dir, fname) for fname in captain_america_files[:pic_index]]

fig = plt.gcf()

fig.set_size_inches(pic_index*4, rps*4)

for i, img_path in enumerate(next_black_widow + next_spider_man + next_captain_america):

    # One subplot per image: rps rows (one per class), pic_index columns

    sp = plt.subplot(rps, pic_index, i + 1)

    sp.axis('Off')

    img = mpimg.imread(img_path)

    plt.imshow(img)

plt.show()

(2) Data Augmentation

In [ ]:

TRAINING_DIR = "/marvel/train"

training_datagen = ImageDataGenerator(

    rescale=1./255,

    rotation_range=40,

    width_shift_range=0.2,

    height_shift_range=0.2,

    shear_range=0.2,

    zoom_range=0.2,

    horizontal_flip=True,

    fill_mode='nearest')

train_generator = training_datagen.flow_from_directory(

    TRAINING_DIR,

    target_size=(150,150),

    class_mode='categorical',

    batch_size=74

)

Found 745 images belonging to 3 classes.

For the test data, only the scale is changed.

In [ ]:

VALIDATION_DIR = "/marvel/valid"

validation_datagen = ImageDataGenerator(rescale = 1./255)

validation_generator = validation_datagen.flow_from_directory(

    VALIDATION_DIR,

    target_size=(150,150),

    class_mode='categorical',

    batch_size=36

)

Found 109 images belonging to 3 classes.
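With class_mode='categorical', each folder name becomes one position in the one-hot label. Keras infers the classes from the subfolder names in alphanumeric order, so the mapping can be previewed without loading any images. The sketch below only mimics that ordering; the function name `class_indices` here is a stand-in, and in the notebook the actual mapping is available as `train_generator.class_indices`.

```python
def class_indices(class_names):
    """Mimic the alphanumeric ordering used to assign label indices."""
    return {name: index for index, name in enumerate(sorted(class_names))}


# Folder names used in this post
labels = class_indices(["spider-man", "black_widow", "captain_america"])
print(labels)
# {'black_widow': 0, 'captain_america': 1, 'spider-man': 2}
```

This order is also the order of the three softmax outputs of the model defined next.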

(3) model define

In [ ]:

model = Sequential([

    Conv2D(64, (3,3), activation='relu', input_shape=(150, 150, 3)),

    MaxPooling2D(2, 2),

    Conv2D(64, (3,3), activation='relu'),

    MaxPooling2D(2,2),

    Conv2D(128, (3,3), activation='relu'),

    MaxPooling2D(2,2),

    Conv2D(128, (3,3), activation='relu'),

    MaxPooling2D(2,2),

    Conv2D(128, (3,3), activation='relu'),

    MaxPooling2D(2,2),

    Flatten(),

    Dropout(0.5),

    Dense(512, activation='relu'),

    Dense(3, activation='softmax')

])

In [ ]:

model.summary()

Model: "sequential_5"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_23 (Conv2D) (None, 148, 148, 64) 1792

_________________________________________________________________

max_pooling2d_23 (MaxPooling (None, 74, 74, 64) 0

_________________________________________________________________

conv2d_24 (Conv2D) (None, 72, 72, 64) 36928

_________________________________________________________________

max_pooling2d_24 (MaxPooling (None, 36, 36, 64) 0

_________________________________________________________________

conv2d_25 (Conv2D) (None, 34, 34, 128) 73856

_________________________________________________________________

max_pooling2d_25 (MaxPooling (None, 17, 17, 128) 0

_________________________________________________________________

conv2d_26 (Conv2D) (None, 15, 15, 128) 147584

_________________________________________________________________

max_pooling2d_26 (MaxPooling (None, 7, 7, 128) 0

_________________________________________________________________

conv2d_27 (Conv2D) (None, 5, 5, 128) 147584

_________________________________________________________________

max_pooling2d_27 (MaxPooling (None, 2, 2, 128) 0

_________________________________________________________________

flatten_5 (Flatten) (None, 512) 0

_________________________________________________________________

dropout_5 (Dropout) (None, 512) 0

_________________________________________________________________

dense_10 (Dense) (None, 512) 262656

_________________________________________________________________

dense_11 (Dense) (None, 3) 1539

=================================================================

Total params: 671,939

Trainable params: 671,939

Non-trainable params: 0

_________________________________________________________________
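The numbers in the summary can be checked by hand. The sketch below recomputes the feature-map size and the parameter counts from the layer definitions, assuming 3x3 convolutions without padding and 2x2 max pooling as in the model above. The helper names are illustrative only.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus one bias per output channel for a Conv2D layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return in_units * out_units + out_units


def feature_map_size(size, n_blocks, kernel=3, pool=2):
    """Spatial size after n_blocks of (unpadded conv, max pooling)."""
    for _ in range(n_blocks):
        size = (size - kernel + 1) // pool
    return size


# Input channels followed by the filter count of each Conv2D layer
channels = [3, 64, 64, 128, 128, 128]
conv_total = sum(conv_params(3, c_in, c_out)
                 for c_in, c_out in zip(channels, channels[1:]))

side = feature_map_size(150, n_blocks=5)   # 150 -> 74 -> 36 -> 17 -> 7 -> 2
flat = side * side * channels[-1]          # size of the Flatten output
total = conv_total + dense_params(flat, 512) + dense_params(512, 3)
print(side, flat, total)
# 2 512 671939
```

The total matches the "Total params: 671,939" line, and the first Dense layer (262,656 parameters) accounts for well over a third of the model.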

(4) model compile

In [ ]:

model.compile(loss = 'categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

batch_size * steps_per_epoch should equal the number of images; this has to be matched separately for train and valid.
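The rule above can be written as a small calculation. This is a sketch using the image counts and batch sizes from this post; `steps_for` is a hypothetical helper, and rounding up is one possible choice so that the last, smaller batch is not dropped.

```python
import math


def steps_for(num_images, batch_size):
    """Number of batches needed to see every image once per epoch."""
    return math.ceil(num_images / batch_size)


train_steps = steps_for(745, 74)   # 745 training images, batch_size=74
valid_steps = steps_for(109, 36)   # 109 validation images, batch_size=36
print(train_steps, valid_steps)
# 11 4
```

With steps_per_epoch=10 and validation_steps=3, as used in the training cell, an epoch covers 740 of the 745 training images and 108 of the 109 validation images. That is consistent with the logged values: for example, val_accuracy 0.4722 is 51/108. In the notebook, `len(train_generator)` and `len(validation_generator)` should give the same rounded-up step counts.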

(5) Train the model

In [ ]:

history = model.fit(

    train_generator,

    epochs=50,

    steps_per_epoch=10,

    validation_data=validation_generator,

    verbose=1,

    validation_steps=3)

Epoch 1/50

10/10 [==============================] - 17s 1s/step - loss: 1.1146 - accuracy: 0.4042 - val_loss: 1.0595 - val_accuracy: 0.4722

Epoch 2/50

10/10 [==============================] - 15s 1s/step - loss: 1.0506 - accuracy: 0.4192 - val_loss: 0.9280 - val_accuracy: 0.4815

Epoch 3/50

10/10 [==============================] - 15s 1s/step - loss: 0.9742 - accuracy: 0.4872 - val_loss: 1.0504 - val_accuracy: 0.4537

Epoch 4/50

10/10 [==============================] - 14s 1s/step - loss: 1.0113 - accuracy: 0.5226 - val_loss: 0.8303 - val_accuracy: 0.6019

Epoch 5/50

10/10 [==============================] - 15s 2s/step - loss: 1.0152 - accuracy: 0.5777 - val_loss: 0.8096 - val_accuracy: 0.5648

Epoch 6/50

10/10 [==============================] - 15s 2s/step - loss: 0.9105 - accuracy: 0.5596 - val_loss: 0.8162 - val_accuracy: 0.6389

Epoch 7/50

10/10 [==============================] - 15s 1s/step - loss: 0.8699 - accuracy: 0.5997 - val_loss: 0.8693 - val_accuracy: 0.6111

Epoch 8/50

10/10 [==============================] - 15s 1s/step - loss: 0.8810 - accuracy: 0.5631 - val_loss: 0.7487 - val_accuracy: 0.6296

Epoch 9/50

10/10 [==============================] - 15s 2s/step - loss: 0.9104 - accuracy: 0.5920 - val_loss: 0.8055 - val_accuracy: 0.6481

Epoch 10/50

10/10 [==============================] - 15s 1s/step - loss: 0.8244 - accuracy: 0.6545 - val_loss: 0.6916 - val_accuracy: 0.6389

Epoch 11/50

10/10 [==============================] - 14s 1s/step - loss: 0.8204 - accuracy: 0.5736 - val_loss: 0.7244 - val_accuracy: 0.6759

Epoch 12/50

10/10 [==============================] - 15s 1s/step - loss: 0.8002 - accuracy: 0.6042 - val_loss: 0.6994 - val_accuracy: 0.6759

Epoch 13/50

10/10 [==============================] - 15s 1s/step - loss: 0.7963 - accuracy: 0.6109 - val_loss: 0.6925 - val_accuracy: 0.6944

Epoch 14/50

10/10 [==============================] - 15s 2s/step - loss: 0.7776 - accuracy: 0.6256 - val_loss: 0.6906 - val_accuracy: 0.6667

Epoch 15/50

10/10 [==============================] - 15s 1s/step - loss: 0.7601 - accuracy: 0.6347 - val_loss: 0.7725 - val_accuracy: 0.7222

Epoch 16/50

10/10 [==============================] - 15s 1s/step - loss: 0.8110 - accuracy: 0.5967 - val_loss: 0.6884 - val_accuracy: 0.6574

Epoch 17/50

10/10 [==============================] - 15s 1s/step - loss: 0.8265 - accuracy: 0.6259 - val_loss: 0.7566 - val_accuracy: 0.6111

Epoch 18/50

10/10 [==============================] - 15s 2s/step - loss: 0.7835 - accuracy: 0.6104 - val_loss: 0.6915 - val_accuracy: 0.6944

Epoch 19/50

10/10 [==============================] - 15s 1s/step - loss: 0.7489 - accuracy: 0.6186 - val_loss: 0.6400 - val_accuracy: 0.7315

Epoch 20/50

10/10 [==============================] - 15s 1s/step - loss: 0.7460 - accuracy: 0.6138 - val_loss: 0.6744 - val_accuracy: 0.7315

Epoch 21/50

10/10 [==============================] - 14s 1s/step - loss: 0.7281 - accuracy: 0.6571 - val_loss: 0.7921 - val_accuracy: 0.6852

Epoch 22/50

10/10 [==============================] - 15s 1s/step - loss: 0.7703 - accuracy: 0.6223 - val_loss: 0.7639 - val_accuracy: 0.6667

Epoch 23/50

10/10 [==============================] - 15s 2s/step - loss: 0.7861 - accuracy: 0.6272 - val_loss: 0.6806 - val_accuracy: 0.7037

Epoch 24/50

10/10 [==============================] - 15s 1s/step - loss: 0.7457 - accuracy: 0.6237 - val_loss: 0.6695 - val_accuracy: 0.7130

Epoch 25/50

10/10 [==============================] - 15s 1s/step - loss: 0.7641 - accuracy: 0.6205 - val_loss: 0.6924 - val_accuracy: 0.7315

Epoch 26/50

10/10 [==============================] - 15s 2s/step - loss: 0.7406 - accuracy: 0.6191 - val_loss: 0.6900 - val_accuracy: 0.7037

Epoch 27/50

10/10 [==============================] - 14s 1s/step - loss: 0.7118 - accuracy: 0.6426 - val_loss: 0.6520 - val_accuracy: 0.7315

Epoch 28/50

10/10 [==============================] - 15s 1s/step - loss: 0.7618 - accuracy: 0.6468 - val_loss: 0.6821 - val_accuracy: 0.7593

Epoch 29/50

10/10 [==============================] - 14s 1s/step - loss: 0.6980 - accuracy: 0.6587 - val_loss: 0.6199 - val_accuracy: 0.7778

Epoch 30/50

10/10 [==============================] - 15s 1s/step - loss: 0.7198 - accuracy: 0.6700 - val_loss: 0.7007 - val_accuracy: 0.7685

Epoch 31/50

10/10 [==============================] - 15s 1s/step - loss: 0.7100 - accuracy: 0.6959 - val_loss: 0.6786 - val_accuracy: 0.7685

Epoch 32/50

10/10 [==============================] - 15s 1s/step - loss: 0.7414 - accuracy: 0.6561 - val_loss: 0.8073 - val_accuracy: 0.6296

Epoch 33/50

10/10 [==============================] - 15s 2s/step - loss: 0.7585 - accuracy: 0.6529 - val_loss: 0.6342 - val_accuracy: 0.7593

Epoch 34/50

10/10 [==============================] - 14s 1s/step - loss: 0.7112 - accuracy: 0.6687 - val_loss: 0.6843 - val_accuracy: 0.7315

Epoch 35/50

10/10 [==============================] - 15s 1s/step - loss: 0.7205 - accuracy: 0.6610 - val_loss: 0.6242 - val_accuracy: 0.7685

Epoch 36/50

10/10 [==============================] - 15s 1s/step - loss: 0.6494 - accuracy: 0.7021 - val_loss: 0.6796 - val_accuracy: 0.7778

Epoch 37/50

10/10 [==============================] - 15s 1s/step - loss: 0.7280 - accuracy: 0.7147 - val_loss: 0.6326 - val_accuracy: 0.7130

Epoch 38/50

10/10 [==============================] - 15s 2s/step - loss: 0.6793 - accuracy: 0.6589 - val_loss: 0.6249 - val_accuracy: 0.8148

Epoch 39/50

10/10 [==============================] - 15s 2s/step - loss: 0.6699 - accuracy: 0.6635 - val_loss: 0.6244 - val_accuracy: 0.7130

Epoch 40/50

10/10 [==============================] - 15s 1s/step - loss: 0.7451 - accuracy: 0.6519 - val_loss: 0.6154 - val_accuracy: 0.7593

Epoch 41/50

10/10 [==============================] - 15s 2s/step - loss: 0.7205 - accuracy: 0.6430 - val_loss: 0.6957 - val_accuracy: 0.6574

Epoch 42/50

10/10 [==============================] - 15s 1s/step - loss: 0.6871 - accuracy: 0.6466 - val_loss: 0.6462 - val_accuracy: 0.7222

Epoch 43/50

10/10 [==============================] - 15s 2s/step - loss: 0.7044 - accuracy: 0.6156 - val_loss: 0.6402 - val_accuracy: 0.7407

Epoch 44/50

10/10 [==============================] - 15s 1s/step - loss: 0.6943 - accuracy: 0.6821 - val_loss: 0.6227 - val_accuracy: 0.7500

Epoch 45/50

10/10 [==============================] - 15s 2s/step - loss: 0.6670 - accuracy: 0.6832 - val_loss: 0.6030 - val_accuracy: 0.7870

Epoch 46/50

10/10 [==============================] - 15s 1s/step - loss: 0.6636 - accuracy: 0.7129 - val_loss: 0.5931 - val_accuracy: 0.7593

Epoch 47/50

10/10 [==============================] - 15s 2s/step - loss: 0.6279 - accuracy: 0.7117 - val_loss: 0.6102 - val_accuracy: 0.7593

Epoch 48/50

10/10 [==============================] - 15s 2s/step - loss: 0.6526 - accuracy: 0.7133 - val_loss: 0.6281 - val_accuracy: 0.7037

Epoch 49/50

10/10 [==============================] - 15s 1s/step - loss: 0.6887 - accuracy: 0.6929 - val_loss: 0.5941 - val_accuracy: 0.7500

Epoch 50/50

10/10 [==============================] - 15s 1s/step - loss: 0.6171 - accuracy: 0.7128 - val_loss: 0.5912 - val_accuracy: 0.7778

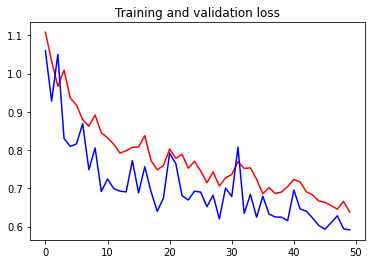

(6) model evaluate (Loss & Accuracy)

In [ ]:

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, loss, 'r')

plt.plot(epochs, val_loss, 'b')

plt.title ('Training and validation loss')

Out[ ]:

Text(0.5, 1.0, 'Training and validation loss')

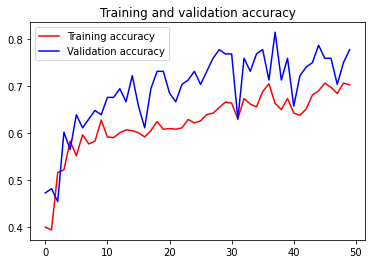
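Besides plotting, the lists in history.history can be scanned for the best epoch. The following is a minimal sketch; `best_epoch` is a made-up helper, and the sample values are the val_accuracy figures for epochs 36 to 40 copied from the training log.

```python
def best_epoch(values, mode="max"):
    """Return (1-based position, value) of the best entry in a metric list."""
    pick = max if mode == "max" else min
    index = pick(range(len(values)), key=lambda i: values[i])
    return index + 1, values[index]


# val_accuracy for epochs 36-40, taken from the training log
sample = [0.7778, 0.7130, 0.8148, 0.7130, 0.7593]
epoch_offset = 35  # the sample starts at epoch 36
position, value = best_epoch(sample)
print(position + epoch_offset, value)
# 38 0.8148

# Hypothetical usage on the full run:
# best_epoch(history.history['val_accuracy'])
# best_epoch(history.history['val_loss'], mode="min")
```

In the full log the highest val_accuracy is also the 0.8148 at epoch 38, while the final epoch ends at 0.7778, so the last weights are not necessarily the best ones.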

In [ ]:

plt.plot(epochs, acc, 'r', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend(loc=0)

plt.figure()

plt.show()

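<Figure size 432x288 with 0 Axes>

Notes after training the model

- The dataset was small, some images contain several characters at once, and a character's appearance often changes a lot from picture to picture, so performance is poor.

- More data and cleaner data are needed.

- I plan to try other characters later.

- GitHub link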

https://github.com/Hm-source/Marvel_heroes_Classification
